Speakers

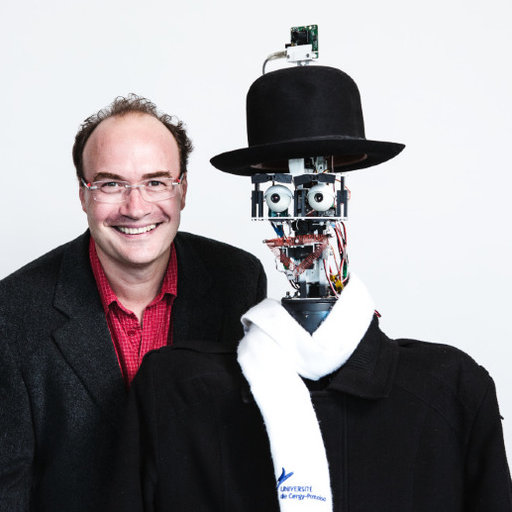

Philippe Gaussier – ETIS laboratory, ENSEA, University of Cergy Pontoise, CNRS, Paris, France

Robot and rat navigation: a model of the interactions between the retrosplenial cortex and the hippocampal system

Place recognition is a complex process involving idiothetic and allothetic information. Local views extracted around feature points in the visual environment can provide enough information to define a visual compass and to recognize a place accurately even when the environment changes significantly. In that case, a central process lies in building conjunctive cells that merge the “what” and “where” information associated with the different local views. A short-term memory is also necessary to transform the sequential exploration into a global code characterizing the place. In previous work, we have shown that such a strategy is effective for obtaining homing behaviour on mobile robots after learning only once a very small number of places around the goal location, when a soft competition is used to determine the winning landmarks. We suppose that the visual information coming from the temporal and parietal cortical areas is merged at the level of the entorhinal cortex (EC) to build a compact code of the places. In recent work, we propose that the retrosplenial cortex is involved in the computation of path integration. This information is then compressed at the level of the EC by several modulo operators. Applying the same principle to visual information makes it possible to explain both the grid cells related to path integration and those related to visual information. We end with a global model of the hippocampal system, in which the EC is dedicated to building a robust yet compact code of the cortical activity while the hippocampus proper learns to predict the transition from one state to another. This model can provide interesting insights for solving scaling issues in long-distance navigation in complex environments.
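As a rough illustration of the modulo compression mentioned in the abstract, the sketch below folds a one-dimensional path-integration value through several modulo operators. The periods (3, 4, 5), the function names, and the one-dimensional integer setting are illustrative assumptions and are not taken from the speaker's model.

```python
from functools import reduce
from math import gcd


def lcm_all(values):
    """Least common multiple of a sequence of positive integers."""
    return reduce(lambda a, b: a * b // gcd(a, b), values)


def modulo_code(position, periods=(3, 4, 5)):
    """Compress an integrated position into one residue per period.

    The periods are hypothetical; each residue repeats regularly along
    the track, loosely like the firing field of a 1D grid cell.
    """
    return tuple(position % p for p in periods)


periods = (3, 4, 5)
span = lcm_all(periods)  # range over which the combined code is unambiguous
codes = [modulo_code(x, periods) for x in range(span)]
unique_codes = len(set(codes))
```

Each residue alone is ambiguous because it repeats with its own period, but with pairwise coprime periods the combination of residues identifies every position within `span`. This is one way a few small periodic codes could cover a long distance compactly.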

Auke Ijspeert – EPFL, Ecole Polytechnique Fédérale de Lausanne, Lausanne – Switzerland

Studying locomotion with a neurorobotics approach

The ability to move efficiently in complex environments is a fundamental property of both animals and robots, and the problem of locomotion and movement control is an area in which neuroscience, biomechanics, and robotics can fruitfully interact. In this talk, I will present how biorobots and numerical models can be used to explore the interplay of the four main components underlying animal locomotion, namely central pattern generators (CPGs), reflexes, descending modulation, and the musculoskeletal system. Going from lamprey to human locomotion, I will present a series of models that tend to show that the respective roles of these components have changed during evolution, with a dominant role of CPGs in lamprey and salamander locomotion, and a more important role for sensory feedback and descending modulation in human locomotion. Interesting properties for robot and lower-limb exoskeleton locomotion control will also be discussed. If time allows, I will also present a recent project showing how robotics can provide scientific tools for paleontology.
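To make the CPG component more concrete, here is a minimal sketch of a chain of coupled phase oscillators, a common abstraction for lamprey-like swimming CPGs. The number of segments, the coupling weight, the frequency, and the function name `simulate_chain` are assumptions chosen for illustration; this is not the specific model presented in the talk.

```python
import numpy as np


def simulate_chain(n=8, freq_hz=1.0, weight=4.0, total_lag=2 * np.pi,
                   dt=0.001, t_end=10.0):
    """Euler-integrate n phase oscillators with nearest-neighbour coupling.

    Each oscillator is pulled toward a fixed phase lag relative to its
    neighbours, so a travelling wave forms along the chain.
    """
    lag = total_lag / n      # desired phase lag between neighbouring segments
    theta = np.zeros(n)      # all segments start in phase
    for _ in range(int(t_end / dt)):
        dtheta = np.full(n, 2 * np.pi * freq_hz)  # intrinsic frequency
        # coupling from the rostral neighbour (i - 1) onto segment i
        dtheta[1:] += weight * np.sin(theta[:-1] - theta[1:] - lag)
        # coupling from the caudal neighbour (i + 1) onto segment i
        dtheta[:-1] += weight * np.sin(theta[1:] - theta[:-1] + lag)
        theta = theta + dt * dtheta
    return theta, lag


theta, lag = simulate_chain()
phase_diffs = np.diff(theta)  # theta[i + 1] - theta[i] for each neighbour pair
```

After the transient, every entry of `phase_diffs` settles near `-lag`, meaning each segment trails the one in front of it by a constant phase. In this kind of abstraction, descending modulation could be represented by changing `freq_hz`, and sensory feedback by adding extra terms to `dtheta`.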

Dominique Martinez – University of Lorraine – LORIA, Nancy – France

Neural computations of olfactory navigation

I will present my work in computational neuroscience and neuro-robotics aiming at understanding the odour-guided behaviour of animals, especially insects. At the application level, the challenge is to create innovative olfactory sensors and robots that reproduce certain aspects of animal behaviour. A virtuous circle is created where biological models benefit from robotic experiments and inspire them in return. The most demonstrative result concerns a hybrid robot with insect antennae as biosensors for the detection and localization of chemical sources.

Ilya Rybak – Department of Neurobiology and Anatomy, Drexel University College of Medicine, Philadelphia, PA, USA

CPG-based control of locomotion: Insights from spinal cord circuit organization

Neural control of locomotion involves interactions between different levels of the nervous system and the environment. Circuits in the spinal cord integrate supra-spinal commands and sensory feedback to control locomotion. We use computer modeling to uncover the organization of spinal locomotor circuits and their interactions with limb biomechanics and the environment. According to the current biological concept, each limb in legged animals, including mammals, is controlled by a separate central pattern generator (CPG) located in the spinal cord. Each CPG is able to generate rhythmic locomotor activity based on the intrinsic properties of CPG neurons and their interactions. The operation of the CPGs and the locomotor frequency, which defines locomotor speed, are controlled by multiple supra-spinal (particularly brainstem) signals and sensory afferent feedback. In turn, limb coordination and gait are defined by mutual interactions between the CPGs through spinal commissural interneurons, which mediate left-right CPG interactions, and long propriospinal neurons, which are involved in fore- and hindlimb interactions. Recent optogenetic and molecular approaches allow specific types of genetically identified spinal and brainstem neurons to be linked to behavior, shedding light on their role in controlling locomotor patterns, speed, and gait. However, the connectivity between the distinct populations of spinal neurons and the underlying mechanisms remain poorly understood. We try to fill this gap in knowledge using computer modeling. We have used the novel genetic and molecular data in a series of computational models of spinal circuits involved in the central and afferent control of CPG-based locomotion. The models of spinal circuits were integrated with biomechanical models of the limbs and body, allowing the investigation of different aspects of legged locomotion. The models reproduce, and propose explanations for, a range of experimental data concerning the control of legged locomotion under different conditions. The integrated study of the neural control of locomotion provides important insights for the CPG-based control of legged robot locomotion.
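The idea that rhythm can arise from intrinsic neuronal properties and mutual interactions can be illustrated with a classic half-centre oscillator. The sketch below uses Matsuoka's two-neuron model (mutual inhibition plus self-adaptation), which is a swapped-in textbook abstraction and not the conductance-based spinal circuit models described in the abstract. All parameter values and names are assumptions.

```python
import numpy as np


def matsuoka_half_center(t_end=30.0, dt=0.001, tau=0.25, tau_adapt=0.5,
                         w_inhib=2.5, beta=2.5, drive=1.0):
    """Euler-integrate a two-neuron Matsuoka oscillator.

    x: membrane-like states, v: adaptation states, y = max(x, 0): outputs.
    `drive` stands in for a tonic supra-spinal command (assumed constant).
    """
    steps = int(t_end / dt)
    x = np.array([0.1, 0.0])  # slightly asymmetric start to break symmetry
    v = np.zeros(2)
    outputs = np.empty((steps, 2))
    for k in range(steps):
        y = np.maximum(x, 0.0)  # rectified firing rates
        # each neuron is inhibited by the other one (y reversed) and by
        # its own adaptation state
        dx = (-x - w_inhib * y[::-1] - beta * v + drive) / tau
        dv = (-v + y) / tau_adapt
        x = x + dt * dx
        v = v + dt * dv
        outputs[k] = np.maximum(x, 0.0)
    return outputs


y = matsuoka_half_center()
late = y[len(y) // 2:]                    # discard the initial transient
dominance = late[:, 0] - late[:, 1]       # which half-centre is stronger
sign_changes = int(np.sum(np.diff(np.sign(dominance)) != 0))
```

With these parameters the symmetric resting state is unstable and neither neuron can suppress the other permanently, so the two outputs take turns being active, much like flexor and extensor half-centres. Coupling several such units with inhibitory or excitatory connections is one simple way to explore left-right and fore-hind coordination.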

Gentaro Taga – University of Tokyo, Tokyo – Japan

Dynamical identification of individual difference in spontaneous movements of young infants

The first human movements that emerge in the fetal and infant periods are spontaneous movements of the whole body, including the limbs, and are referred to as general movements (GMs) (Prechtl 1990). GMs are characterized by complexity and fluency (Prechtl 1990, Taga et al. 1999, Gima et al. 2019). GMs are assumed to reflect the developmental status of the brain (Hadders-Algra 2007) and are associated with long-term developmental disorders such as cerebral palsy (Kanemaru et al. 2014), developmental delay (Kanemaru et al. 2013) and autism spectrum disorder (ASD) (Gima et al. 2018). While recent advances in brain imaging of young infants have unveiled the spontaneous and stimulus-driven dynamics of the brain in early infancy (Taga et al. 2003, Homae et al. 2010, Watanabe et al. 2017), the neural mechanisms underlying GMs are not well understood. In the present study, we asked whether the GMs of typically developing infants have individual differences in dynamical properties. To answer this question, we constructed a methodology for identifying the individuality of GMs in terms of dynamical systems. First, we obtained 3D movement trajectories of the four limbs of 58 three-month-old (3M) infants for 10 minutes using a 3D motion analysis system. The time series were differentiated to provide 12-dimensional velocity time series. The time series data were split into a first and a second half, used as memory and test data respectively, and time-delay embeddings were performed on each to reconstruct the movement attractors of the nonlinear dynamics in phase space (Sugihara & May 1990, Ye & Sugihara 2016), as shown in Fig. 1. Then, we proposed an algorithm to calculate the time evolution generated by the reconstructed dynamics of the memory data when an external input was given (a similar algorithm was proposed by Tsuda et al. 1992). Test data from the same infant or from another infant were chosen as input to the memory-data dynamics. In computer simulations, we found that the memory dynamics produced time series that were highly entrained by the input only when the input was generated from the same infant. Finally, we tested whether individual identification among all of the infant data was possible by examining the magnitude of entrainment. We found that the rate of successful identification was more than 80%. The result of this study shows that the GMs of young infants have individual differences in dynamical properties. Furthermore, the proposed method could be widely applied to examine how experimentally obtained spontaneous dynamics respond to external input. The present study may also serve as a dynamical model for maintaining self-identity based on the global entrainment (Taga et al. 1991) between the memorized and present dynamics.
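The time-delay embedding step mentioned in the abstract can be sketched as follows. The toy sine signal, the embedding dimension, the delay, and the function name `delay_embed` are assumptions chosen for illustration; the study's actual data are 12-dimensional limb velocities and its embedding parameters are not given here.

```python
import numpy as np


def delay_embed(series, dim, tau):
    """Return delay vectors [x(t), x(t - tau), ..., x(t - (dim - 1) * tau)].

    Each row is one reconstructed state in phase space.
    """
    series = np.asarray(series, dtype=float)
    n_vectors = len(series) - (dim - 1) * tau
    if n_vectors <= 0:
        raise ValueError("series too short for this dim and tau")
    start = (dim - 1) * tau
    columns = [series[start - k * tau: start - k * tau + n_vectors]
               for k in range(dim)]
    return np.column_stack(columns)


# Toy signal standing in for a single velocity channel (assumption)
t = np.arange(2000) * 0.01
signal = np.sin(t)

# Split into first and second half, mirroring the memory/test split
half = len(signal) // 2
memory = delay_embed(signal[:half], dim=3, tau=25)
test = delay_embed(signal[half:], dim=3, tau=25)
```

Here `memory` and `test` are arrays of reconstructed states, one row per time point. An entrainment analysis such as the one described in the abstract would then drive the dynamics reconstructed from `memory` with the `test` series; that step is not reproduced in this sketch.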

Hakaru Tamukoh – Kyushu Institute of Technology, Kyushu – Japan

Brain-inspired artificial intelligence model for neurorobotics

Home service robots are expected to support human memory and assist human decision-making. To do so, they need to acquire episodic memories in daily life, make value judgments about which memories to acquire, and make predictions based on those memories. This presentation describes a brain-inspired artificial intelligence model and applies it to home service robots. The proposed model includes functions of the hippocampus, amygdala, and prefrontal cortex, and can acquire episodic memory from few experiences and predict future events. We show several demonstrations in which a home service robot learns time-series events in a restaurant and estimates the meaning of “that” through a few human-robot interactions. Through this presentation, we show the effectiveness of the brain-inspired artificial intelligence model and discuss its importance for neurorobotics.